In the previous post, I wrote about expectation, a great tool that helps us capture the notion of “center” for a random variable. In this post, I will write about variance, another great tool that represents the “spread” of a random variable.

Consider a random variable $x$ with two probability density functions (PDFs) $p_1(x)$ and $p_2(x)$, shown in the figure below. $x$ under both PDFs has the same expected value (i.e., $\E_{p_1}[x] = \E_{p_2}[x]$). But $p_2(x)$ has a larger “spread” compared to $p_1(x)$. In other words, samples drawn from $p_2(x)$ deviate more from their mean than samples drawn from $p_1(x)$. Variance helps us represent this notion of spread from the mean.
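To make the idea of “same center, different spread” concrete, here is a minimal sketch. It assumes two Normal distributions that I picked only for illustration (the names `narrow` and `wide` and their parameters are not from the figure), and it measures how far samples fall from their own mean on average.

```python
import random
import statistics

random.seed(0)

# Two hypothetical distributions with the same mean (0) but different spread.
narrow = [random.gauss(0.0, 1.0) for _ in range(10_000)]
wide = [random.gauss(0.0, 3.0) for _ in range(10_000)]

for name, samples in [("narrow", narrow), ("wide", wide)]:
    mean = statistics.fmean(samples)
    # Average absolute distance of the samples from their own mean.
    avg_dev = statistics.fmean(abs(s - mean) for s in samples)
    print(f"{name}: mean={mean:.3f}, average deviation={avg_dev:.3f}")
```

Both sample means should land close to zero, while the average deviation of `wide` should come out roughly three times larger than that of `narrow`.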

Definition:

The variance of a random variable $x$ is represented by $\var{x}$ and is defined by the expected value of the squared deviation from the mean $\mu = \E[x]$:

$$\var{x} = \E[(x - \mu)^2]$$

$\var{x}$ is also often represented by $\sigma^2$ as the variance is the square of the standard deviation denoted by $\sigma$.

The variance can also be expanded as:

![Rendered by QuickLaTeX.com \begin{align*} \var{x} &= \E[(x - \mu)^2] \\ &= \E[x^2 - 2 \mu x + \mu^2 ] \\ &= \E[x^2] - 2\mu\E[x] + \mu^2 \\ &= \E[x^2] - 2\mu\mu + \mu^2 \\ &= \E[x^2] - \mu^2 \\ &= \E[x^2] - \E[x]^2. \end{align*}](http://latentspace.cc/wp-content/ql-cache/quicklatex.com-a361c2da9ecba3084bfdcca178dcc0c2_l3.png)
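The two forms of the variance can be checked numerically. The sketch below assumes a Normal distribution with mean 2 and standard deviation 1.5 (values chosen only for illustration) and replaces each expectation with an average over samples.

```python
import random
import statistics

random.seed(0)

# Assumed distribution for illustration: Normal with mean 2 and std 1.5.
xs = [random.gauss(2.0, 1.5) for _ in range(50_000)]

mu = statistics.fmean(xs)
# Definition: mean squared deviation from the mean.
var_definition = statistics.fmean((x - mu) ** 2 for x in xs)
# Expanded form: E[x^2] - E[x]^2.
var_expanded = statistics.fmean(x * x for x in xs) - mu ** 2

print(var_definition, var_expanded)
```

The two numbers should agree up to floating-point error, and both should be close to the true variance $1.5^2 = 2.25$.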

Variance is closely related to covariance, which represents the linear dependency between two variables. In fact, it is easy to see that:

$$\var{x} = \mathrm{Cov}(x, x)$$

Properties:

Sign: Variance is always non-negative:

$$\var{x} \geq 0$$

Shift invariance: Variance is invariant to shift:

$$\var{x + a} = \var{x}$$

where $a$ is a constant.

Scaling: Scaling a random variable by $a$ scales the variance by $a^2$:

$$\var{a x} = a^2 \var{x} \tag{1}$$

Sum: The variance of a sum or difference is:

$$\var{x \pm y} = \var{x} + \var{y} \pm 2\,\mathrm{Cov}(x, y)$$

where $\mathrm{Cov}(x, y)$ denotes the covariance between two random variables. This can be easily generalized to the sum of many random variables:

$$\var{\sum_{i=1}^{N} x_i} = \sum_{i=1}^{N} \var{x_i} + \sum_{i \neq j} \mathrm{Cov}(x_i, x_j)$$

If all $x_i$ are independent or uncorrelated, we have $\mathrm{Cov}(x_i, x_j) = 0$, $\forall i \neq j$. This results in:

$$\var{\sum_{i=1}^{N} x_i} = \sum_{i=1}^{N} \var{x_i} \tag{2}$$
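The shift, scaling, and sum properties also hold exactly for sample statistics, which makes them easy to check. The sketch below is only an illustration: the helpers `var` and `cov` are my own small functions (not a library API), and `y` is deliberately built to be correlated with `x` so the covariance term matters.

```python
import random
import statistics

def var(xs):
    """Sample variance as the mean squared deviation from the mean."""
    m = statistics.fmean(xs)
    return statistics.fmean((x - m) ** 2 for x in xs)

def cov(xs, ys):
    """Sample covariance as the mean product of deviations."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    return statistics.fmean((x - mx) * (y - my) for x, y in zip(xs, ys))

random.seed(0)
x = [random.gauss(0.0, 1.0) for _ in range(20_000)]
# y depends on x, so Cov(x, y) is not zero.
y = [0.5 * xi + random.gauss(0.0, 1.0) for xi in x]
a = 3.0  # constant used for both the shift and the scaling check

shifted = var([xi + a for xi in x])
scaled = var([a * xi for xi in x])
summed = var([xi + yi for xi, yi in zip(x, y)])
differenced = var([xi - yi for xi, yi in zip(x, y)])

print("shift:  ", shifted, "vs", var(x))
print("scaling:", scaled, "vs", a ** 2 * var(x))
print("sum:    ", summed, "vs", var(x) + var(y) + 2 * cov(x, y))
print("minus:  ", differenced, "vs", var(x) + var(y) - 2 * cov(x, y))
```

Each pair of printed values should match up to floating-point error. Dropping the covariance term in the last two lines would give a visibly wrong answer here, because `x` and `y` are correlated.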

Example:

Assume $x$ is a Normally-distributed random variable with mean $\mu$ and variance $\sigma^2$, i.e., $x \sim \mathcal{N}(\mu, \sigma^2)$. We use a Monte Carlo estimate of the mean by drawing $N$ samples from the distribution denoted by $\{x^{(n)}\}_{n=1}^{N}$:

![Rendered by QuickLaTeX.com \[ \hat{\mu} = \frac{1}{N} \sum_{n=1}^{N} x^{(n)} \]](http://latentspace.cc/wp-content/ql-cache/quicklatex.com-ecf72491f0d271c797c9a2fd8bc61592_l3.png)

What are the mean and variance of $\hat{\mu}$?

First, we should note that $\hat{\mu}$ itself is a random variable. In other words, it has its own distribution with its own mean and variance. Second, $\hat{\mu}$ is a stochastic estimation of $\mu$, i.e., $\mu$ is fixed in our problem, but $\hat{\mu}$ depends on the set of samples $\{x^{(n)}\}_{n=1}^{N}$. If we calculate $\hat{\mu}$ using a different set of samples, its value will be different.

Mean: Let’s use what we learned in the previous post to derive the mean of $\hat{\mu}$:

![Rendered by QuickLaTeX.com \begin{align*} \E[\hat{\mu}] &= \E[\frac{1}{N} \sum_{n=1}^{N} x^{(n)}] = \frac{1}{N} \sum_{n=1}^{N} \E[x^{(n)}] = \frac{1}{N} \sum_{n=1}^{N} \mu = \mu \end{align*}](http://latentspace.cc/wp-content/ql-cache/quicklatex.com-71fd465f888f768f9921d03f00e45a6a_l3.png)

So, the expected value of $\hat{\mu}$ is $\mu$.

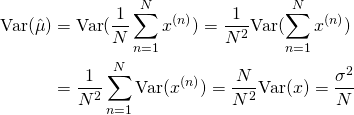

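Variance: Since the samples are drawn independently, we can use the sum and scaling properties (Eq. 1 and 2):

$$\var{\hat{\mu}} = \var{\frac{1}{N} \sum_{n=1}^{N} x^{(n)}} = \frac{1}{N^2} \var{\sum_{n=1}^{N} x^{(n)}} = \frac{1}{N^2} \sum_{n=1}^{N} \var{x^{(n)}} = \frac{1}{N^2} N \sigma^2 = \frac{\sigma^2}{N}$$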

As you can see, the variance of $\hat{\mu}$ depends on the sample size. As the sample size grows, $\var{\hat{\mu}}$ goes to zero. In fact, the sum of independent Normally-distributed random variables has a Normal distribution itself. Thus, we have $\hat{\mu} \sim \mathcal{N}(\mu, \sigma^2 / N)$. As $N$ increases, the distribution of $\hat{\mu}$, which is centered at $\mu$, becomes narrower, and as a result, $\hat{\mu}$ approaches $\mu$.
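We can also watch this happen in a simulation. The sketch below assumes $\mu = 1$ and $\sigma = 2$ (arbitrary values chosen for illustration), recomputes $\hat{\mu}$ many times with fresh samples for a few sample sizes, and compares the empirical variance of $\hat{\mu}$ against $\sigma^2 / N$.

```python
import random
import statistics

random.seed(0)
mu, sigma = 1.0, 2.0   # assumed true mean and standard deviation
num_trials = 1_000     # number of independent sample sets per N

def sample_mean(n):
    """Monte Carlo estimate of the mean from n independent Normal samples."""
    return statistics.fmean(random.gauss(mu, sigma) for _ in range(n))

results = {}
for n in (10, 100, 400):
    estimates = [sample_mean(n) for _ in range(num_trials)]
    est_mean = statistics.fmean(estimates)
    est_var = statistics.pvariance(estimates)
    results[n] = (est_mean, est_var)
    print(f"N={n:4d}  mean={est_mean:.3f}  var={est_var:.4f}  "
          f"sigma^2/N={sigma ** 2 / n:.4f}")
```

For every $N$, the average of the estimates should stay near $\mu$, while their variance should shrink roughly in proportion to $1/N$.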

Why variance is important in training:

Recall that in the previous post I mentioned that many training objective functions take the form of an expectation. The gradient of those objective functions is also in the form of an expectation. Since, in general, we don’t have an analytic expression for the expected value of the gradient, we typically use a stochastic estimation of the gradient for optimizing the objective function. In this case, the variance of the gradient estimator plays a crucial role in training. High gradient variance can slow training down considerably.

In practice, many factors impact the variance of a gradient estimator. Here, we are going to analyze the effect of the training batch size. Let’s consider a model with a single parameter. We represent this model by $f_w(x)$ where $x$ is an input instance and $w$ is the parameter. For example, our model can be as simple as a linear function $f_w(x) = wx$. Let’s denote the target variable by $y$ and the loss function measuring the mismatch between the model output $f_w(x)$ and target $y$ by $l(f_w(x), y)$. The training objective is to find $w$ that has the lowest loss on average. This is formulated as minimizing the following expectation:

$$L(w) = \E_{p(x, y)}[l(f_w(x), y)]$$

where $p(x, y)$ is the joint distribution of input and target variables. We can use a gradient-based optimization method to minimize $L(w)$. For this, we require computing the gradient of the objective function:

$$g = \frac{\partial}{\partial w} L(w) = \E_{p(x, y)}\left[\frac{\partial}{\partial w} l(f_w(x), y)\right]$$

As you can see, both the objective function $L(w)$ and the gradient $g$ are in the form of an expectation.

We don’t have the joint $p(x, y)$ explicitly. Thus, we cannot compute the expectations analytically. However, the training dataset $\{(x^{(i)}, y^{(i)})\}$ consists of samples drawn from the joint $p(x, y)$. We can define a Monte Carlo estimate of the gradient using a mini-batch of $M$ randomly-selected training datapoints:

![Rendered by QuickLaTeX.com \[ \hat{g} = \frac{1}{M}\sum_{i=1}^{M} \frac{\partial}{\partial w} l(f_w(x^{(i)}), y^{(i)}), \]](http://latentspace.cc/wp-content/ql-cache/quicklatex.com-e745269ed73682ad71c05764294ee045_l3.png)

where $\hat{g}$ is the stochastic estimator of the true gradient $g$. As we saw in the example, $\var{\hat{g}} = \sigma_g^2 / M$ where $\sigma_g^2 = \var{\frac{\partial}{\partial w} l(f_w(x), y)}$ is the variance of the gradient for a single datapoint. We generally don’t have $\sigma_g^2$, so we cannot quantify $\var{\hat{g}}$. However, it is easy to see that $\var{\hat{g}}$ reduces as the mini-batch size increases. In other words, increasing the training mini-batch size, on average, brings $\hat{g}$ closer to the true gradient.
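The effect of the mini-batch size can be sketched on a toy problem. Everything specific below is an assumption made for illustration: the linear model $f_w(x) = wx$ with a squared-error loss, a synthetic dataset generated as $y = 2x$ plus noise, the evaluation point $w = 0.5$, and mini-batches drawn with replacement so that the datapoints in a batch are independent.

```python
import random
import statistics

random.seed(0)

# Hypothetical toy dataset: y = 2x + noise.
data = []
for _ in range(5_000):
    x = random.gauss(0.0, 1.0)
    data.append((x, 2.0 * x + random.gauss(0.0, 0.5)))

w = 0.5  # parameter value at which we evaluate the gradient

def grad(x, y):
    # d/dw of the squared error (w*x - y)^2 for the model f_w(x) = w*x.
    return 2.0 * (w * x - y) * x

# Variance of the per-example gradient over the dataset.
sigma_g2 = statistics.pvariance([grad(x, y) for x, y in data])

def minibatch_gradient(m):
    """Average gradient over m datapoints sampled with replacement."""
    batch = random.choices(data, k=m)
    return statistics.fmean(grad(x, y) for x, y in batch)

grad_var = {}
for m in (1, 10, 100):
    estimates = [minibatch_gradient(m) for _ in range(2_000)]
    grad_var[m] = statistics.pvariance(estimates)
    print(f"M={m:3d}  var={grad_var[m]:.4f}  sigma_g^2/M={sigma_g2 / m:.4f}")
```

The empirical variance of the mini-batch gradient should track the per-example variance divided by $M$. In a real training run the per-example variance is not known up front, but the $1/M$ trend is the same.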

Summary

In this post, we learned about variance and its basic properties, and we saw how we can use them to derive the mean and variance of an estimator. In the next post, we will dig deeper into an ML problem to better understand how these fundamental concepts play together during training. If you would like to stay updated on future posts, you can use the form below to subscribe.