Machine learning (ML) is filled with various forms of mathematical expectations. It is no exaggeration to say that almost all training objectives in ML take the form of an expectation. In this blog post, I will review some basics of expectations to build a foundation for my next posts on probabilistic ML.

I personally believe that everyone should learn to use expectations when making personal decisions. Professional investors, for example, routinely analyze investment opportunities by estimating the expected value of an outcome and the variance associated with it.

Simple Example:

Imagine you want to buy a lottery ticket for $2 and you would like to know what you will win on average. You know 1 million tickets are sold as part of this lottery and the prizes are:

- $1M for 1 person

- $10,000 for 10 people

- $1,000 for 100 people

- $100 for 1,000 people

Let’s look at the probability of each event:

- You win $1M with probability 1 in 1,000,000

- You win $10K with probability 1 in 100,000

- You win $1K with probability 1 in 10,000

- You win $100 with probability 1 in 1,000

- You win nothing with probability \(1 - \frac{1{,}111}{1{,}000{,}000} = 0.998889\)

Let’s represent each of these events using \(x \in \{x_1, x_2, x_3, x_4, x_5\}\), where \(x_1\) is winning $1M, \(x_2\) is winning $10K, \(x_3\) is winning $1K, \(x_4\) is winning $100, and \(x_5\) is winning nothing. We know, for example, that \(p(x = x_1) = 10^{-6}\). Let’s also represent the prize you win with \(f(x)\), i.e., \(f(x) = \$1\text{M}\) if \(x = x_1\). The expected value of the prize you will win is:

\[ \mathbb{E}_{p(x)}[f(x)] = \sum_{i=1}^{5} p(x = x_i)\, f(x_i) \]

You can think of this equation as a weighted average of the outcomes, where the weight of each outcome is its probability. Rare outcomes therefore receive small weights. The expected value of the prize is:

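\[ \mathbb{E}_{p(x)}[f(x)] = 10^{-6} \times 10^{6} + 10^{-5} \times 10^{4} + 10^{-4} \times 10^{3} + 10^{-3} \times 10^{2} + 0.998889 \times 0 = 1 + 0.1 + 0.1 + 0.1 + 0 = 1.3 \]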

So on average, you will win $1.3 by paying $2!

What amazes me here is that the number $1.3 is not easy to see from the list of prizes. Looking at this list, you may think that “at least you will win $100, which is 50 times what you invested ($2)”, when in fact you win nothing with probability 0.998889.
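To make the arithmetic concrete, here is a minimal Python sketch that computes the expected prize as a probability-weighted sum. It uses exact fractions so no rounding creeps in; the names `PRIZES` and `lottery_expectation` are hypothetical and simply encode the prize list above.

```python
from fractions import Fraction

TOTAL_TICKETS = 1_000_000

# (prize in dollars, number of winning tickets), taken from the prize list above
PRIZES = [(1_000_000, 1), (10_000, 10), (1_000, 100), (100, 1_000)]


def lottery_expectation(prizes, total_tickets):
    """Return (expected prize, probability of winning nothing) as exact fractions."""
    winning_tickets = sum(count for _, count in prizes)
    p_nothing = Fraction(total_tickets - winning_tickets, total_tickets)
    # Weighted average: each prize value times its probability count / total_tickets.
    # The "win nothing" outcome contributes p_nothing * 0 = 0, so it is omitted.
    expected = sum(Fraction(count, total_tickets) * value for value, count in prizes)
    return expected, p_nothing


expected, p_nothing = lottery_expectation(PRIZES, TOTAL_TICKETS)
print(float(p_nothing))     # 0.998889
print(float(expected))      # 1.3
print(float(expected - 2))  # -0.7  (expected net result after paying $2)
```

The last line shows the expected net result of buying one ticket: on average you lose 70 cents per ticket.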

Definition:

If \(x\) is a discrete random variable with \(\mathcal{X}\) representing the set of all possible values of \(x\), the expected value of \(f(x)\) given that \(x\) has distribution \(p(x)\) is denoted by:

\[ \mathbb{E}_{p(x)}[f(x)] = \sum_{x \in \mathcal{X}} p(x)\, f(x) \]

Sometimes this notation is simplified to \(\mathbb{E}_{p}[f(x)]\) or even \(\mathbb{E}[f(x)]\) if the distribution of \(x\) is implied.
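The discrete definition translates almost word for word into code. This sketch assumes the distribution is given as a Python dictionary mapping each value \(x\) to \(p(x)\); `expect_discrete` is a hypothetical helper, and the fair die is just an illustrative example:

```python
from fractions import Fraction


def expect_discrete(pmf, f):
    """E_{p(x)}[f(x)] = sum over all possible values x of p(x) * f(x).

    `pmf` maps each possible value x to its probability p(x).
    """
    total = sum(pmf.values())
    if total != 1:
        raise ValueError(f"probabilities must sum to 1, got {total}")
    return sum(p * f(x) for x, p in pmf.items())


# Illustrative example: a fair six-sided die, p(x) = 1/6 for x in {1, ..., 6}
die = {x: Fraction(1, 6) for x in range(1, 7)}

print(expect_discrete(die, lambda x: x))       # 7/2
print(expect_discrete(die, lambda x: x ** 2))  # 91/6
```

Exact fractions keep the sum-to-one check reliable; with floating-point probabilities you would compare the total against 1 with a tolerance (for example `math.isclose`) instead.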

If \(x\) is a continuous random variable where \(\mathcal{X}\) is the sample space of \(x\), the summation is replaced by an integral:

\[ \mathbb{E}_{p(x)}[f(x)] = \int_{\mathcal{X}} p(x)\, f(x)\, dx \]

Here, \(p(x)\) is the probability density function for random variable \(x\). For example, if \(x\) has a Normal distribution, its sample space is the space of all real numbers \(\mathbb{R}\). In this case the integral is written as:

\[ \mathbb{E}_{p(x)}[f(x)] = \int_{-\infty}^{+\infty} p(x)\, f(x)\, dx \]
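For a continuous random variable, one way to approximate this integral numerically is the trapezoidal rule. The sketch below is an illustration under stated assumptions rather than a general-purpose integrator: it uses a Normal distribution with mean 3 and standard deviation 1 (the same one used later in this post), truncates the real line to mean \(\pm 10\) standard deviations where the density is negligible, and the helper names `normal_pdf` and `expect_continuous` are hypothetical.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of the Normal distribution with mean mu and standard deviation sigma."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (math.sqrt(2 * math.pi) * sigma)


def expect_continuous(pdf, f, lo, hi, n=10_000):
    """Trapezoidal-rule approximation of the integral of pdf(x) * f(x) over [lo, hi].

    Assumes the density is negligible outside [lo, hi], so truncating an
    infinite sample space such as the real line introduces only a tiny error.
    """
    h = (hi - lo) / n
    total = 0.5 * (pdf(lo) * f(lo) + pdf(hi) * f(hi))
    for i in range(1, n):
        x = lo + i * h
        total += pdf(x) * f(x)
    return total * h


MU, SIGMA = 3.0, 1.0


def pdf(x):
    return normal_pdf(x, MU, SIGMA)


# Truncate the real line to MU +/- 10 * SIGMA, where the density is below 1e-21.
LO, HI = MU - 10 * SIGMA, MU + 10 * SIGMA

print(round(expect_continuous(pdf, lambda x: 1.0, LO, HI), 6))     # 1.0  (the density integrates to 1)
print(round(expect_continuous(pdf, lambda x: x, LO, HI), 6))       # 3.0  (the mean)
print(round(expect_continuous(pdf, lambda x: x ** 2, LO, HI), 6))  # 10.0 (SIGMA**2 + MU**2)
```

The last line uses the identity \(\mathbb{E}[x^2] = \sigma^2 + \mu^2\) for a Normal random variable as an extra check.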

Properties:

Let’s go over some of the properties that are commonly used in ML:

1) Mean: If \(f(x) = x\), then \(\mathbb{E}_{p(x)}[x]\) is the mean of the random variable \(x\).

2) Constant: If \(f(x)\) is constant, i.e., \(f(x) = c\), the expected value is also constant:

\[ \mathbb{E}_{p(x)}[c] = c \]

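3) Linearity:

\[ \mathbb{E}[a\,x + b\,y] = a\,\mathbb{E}[x] + b\,\mathbb{E}[y] \]

where \(x\) and \(y\) are two random variables and \(a\) and \(b\) are constants. This holds whether or not \(x\) and \(y\) are independent.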

4) Jensen’s inequality: If \(f\) is a convex function, we have:

\[ f\big(\mathbb{E}[x]\big) \le \mathbb{E}\big[f(x)\big] \]

In other words, if you apply a convex function \(f\) to the expected value of a random variable, the result is less than or equal to the expected value of the function applied to that random variable. The direction of the inequality is reversed if \(f\) is a concave function. I will discuss this inequality in more detail in another post.
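The properties above are easy to sanity-check numerically. This sketch assumes a small, made-up joint distribution over pairs \((x, y)\) in which \(x\) and \(y\) are dependent, and checks the constant rule, linearity, and both directions of Jensen’s inequality with \(f(t) = t^2\) (convex) and \(f(t) = \log t\) (concave). The helper `expect` is hypothetical.

```python
import math

# Made-up joint distribution over pairs (x, y); x and y are deliberately dependent.
joint = {(1, 2): 0.2, (2, 1): 0.3, (3, 5): 0.5}


def expect(g):
    """E[g(x, y)] under the joint distribution above."""
    return sum(p * g(x, y) for (x, y), p in joint.items())


a, b, c = 2.0, 3.0, 7.0

# 2) Constant: E[c] = c
constant_ok = math.isclose(expect(lambda x, y: c), c)

# 3) Linearity: E[a x + b y] = a E[x] + b E[y], even though x and y are dependent
lhs = expect(lambda x, y: a * x + b * y)
rhs = a * expect(lambda x, y: x) + b * expect(lambda x, y: y)
linearity_ok = math.isclose(lhs, rhs)

# 4) Jensen: f(E[x]) <= E[f(x)] for the convex f(t) = t**2 ...
mean_x = expect(lambda x, y: x)
jensen_convex_ok = mean_x ** 2 <= expect(lambda x, y: x ** 2)
# ... and the inequality flips for the concave f(t) = log(t) (all x > 0 here)
jensen_concave_ok = math.log(mean_x) >= expect(lambda x, y: math.log(x))

print(constant_ok, linearity_ok, jensen_convex_ok, jensen_concave_ok)  # True True True True
```

A numerical check like this is not a proof, but it is a quick way to catch a flipped inequality sign.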

Computing an Expectation:

In many ML problems, we need to compute the value of an expectation. Here, I review three different approaches to this problem. For this section, let’s assume we are interested in finding the expected value of a Normal random variable with mean \(3\) and standard deviation \(1\). That is to say, find:

\[ \mathbb{E}_{p(x)}[x] = \int_{-\infty}^{+\infty} p(x)\, x\, dx, \quad \text{where } p(x) = \mathcal{N}(x; 3, 1) \]

1) Know your functions:

Solving an expectation is the same as taking the integral of a function. This function is nothing but the product of the probability density function ![]() and

and ![]() . In our example, the functions

. In our example, the functions ![]() and

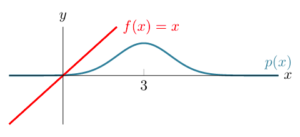

and ![]() look like:

look like:

Above, it is not clear how we can take the integral of ![]() . But, if

. But, if ![]() was shifted such that it was crossing the

was shifted such that it was crossing the ![]() -axis at the mean of the Normal distribution, we would have symmetric functions. This shifted function is

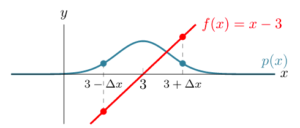

-axis at the mean of the Normal distribution, we would have symmetric functions. This shifted function is ![]() :

:

As you can see above both ![]() and

and ![]() are symmetric around

are symmetric around ![]() (the mean of the Normal distribution). The symmetry is such that if we consider two mirrored points

(the mean of the Normal distribution). The symmetry is such that if we consider two mirrored points ![]() and

and ![]() , the probability density function is equal, i.e.,

, the probability density function is equal, i.e., ![]() for any positive

for any positive ![]() . But, the function

. But, the function ![]() is negated for those points, i.e.,

is negated for those points, i.e., ![]() . As you can guess, the product of

. As you can guess, the product of ![]() and

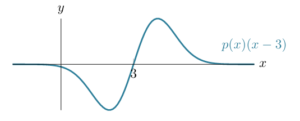

and ![]() is also symmetric:

is also symmetric:

Given this symmetry, it is easy to see that the integral of ![]() for the entire

for the entire ![]() -axis is zero. This means

-axis is zero. This means ![]() . Now, we can use this to solve our expectation problem:

. Now, we can use this to solve our expectation problem:

![]()
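The symmetry argument can also be checked numerically. This sketch evaluates \(p(x)g(x)\) at a few mirrored points \(3 \pm a\) and then approximates the integral with a Riemann sum over a grid that is symmetric around \(3\). The grid spacing and range are arbitrary illustrative choices, and `math.fsum` is used so that rounding error does not accumulate in the sum.

```python
import math


def p(x):
    """Density of N(3, 1), the distribution used in this section."""
    return math.exp(-((x - 3) ** 2) / 2) / math.sqrt(2 * math.pi)


def g(x):
    """The shifted function g(x) = x - 3."""
    return x - 3


# At mirrored points 3 - a and 3 + a, p is equal and g is negated,
# so the product p(x) * g(x) takes opposite values.
for a in [0.5, 1.0, 2.0]:
    left = p(3 - a) * g(3 - a)
    right = p(3 + a) * g(3 + a)
    print(f"a = {a}: p*g(3-a) = {left:+.4f}, p*g(3+a) = {right:+.4f}")

# Riemann sum of p(x) * g(x) on a grid symmetric around 3 (spacing h, range 3 +/- 10):
# the terms cancel in pairs, so the approximate integral is essentially zero.
h = 0.001
grid = [3 + k * h for k in range(-10_000, 10_001)]
integral = h * math.fsum(p(x) * g(x) for x in grid)
print(abs(integral) < 1e-12)  # True
```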

2) Just do the math:

So far, we have used the shapes of the functions \(p(x)\) and \(f(x)\) to derive the expected value. In practice, visualizing a function and its underlying distribution does not always give us the value of an expectation, but it gives us intuition on how to attack the problem. Here, we will try to solve the integral directly using techniques from calculus. We have:

\[ \begin{align*} \mathbb{E}_{p(x)}[x] &= \mathbb{E}_{p(x)}[x - 3 + 3] = \mathbb{E}_{p(x)}[x - 3] + \mathbb{E}_{p(x)}[3] \\ &= \int_{-\infty}^{+\infty} \frac{1}{\sqrt{2\pi}} e^{-\frac{(x-3)^2}{2}} (x - 3)\, dx + 3 \end{align*} \]

Note that here we use the definition of the Normal distribution, i.e., \(p(x) = \frac{1}{\sqrt{2\pi}} e^{-\frac{(x-3)^2}{2}}\). Now, we can introduce the change of variable \(u = \frac{(x-3)^2}{2}\) and \(du = (x - 3)\, dx\):

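\[ \begin{align*} \mathbb{E}_{p(x)}[x] &= \left[ \int \frac{1}{\sqrt{2\pi}} e^{-u}\, du \right]_{x=-\infty}^{x=+\infty} + 3 = \left[ -\frac{1}{\sqrt{2\pi}} e^{-\frac{(x-3)^2}{2}} \right]_{-\infty}^{+\infty} + 3 \\ &= (0 - 0) + 3 = 3 \end{align*} \]

Since \(u = \frac{(x-3)^2}{2}\) is not monotonic in \(x\), the substitution is used here only to find an antiderivative, which is then evaluated in terms of \(x\). Both limits \(x \to -\infty\) and \(x \to +\infty\) send \(u \to +\infty\), so both boundary terms are zero.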
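As a sketch, the same steps can be repeated for a general Normal distribution \(p(x) = \mathcal{N}(x; \mu, \sigma^2)\) with mean \(\mu\) and standard deviation \(\sigma\) (symbols not used in the example above). With the substitution \(u = \frac{(x-\mu)^2}{2\sigma^2}\), \(du = \frac{x-\mu}{\sigma^2}\, dx\), the antiderivative of the integrand is \(-\frac{\sigma}{\sqrt{2\pi}} e^{-u}\), which gives:

\[ \begin{align*} \mathbb{E}_{p(x)}[x] &= \mathbb{E}_{p(x)}[x - \mu] + \mu = \int_{-\infty}^{+\infty} \frac{1}{\sqrt{2\pi}\,\sigma} e^{-\frac{(x-\mu)^2}{2\sigma^2}} (x - \mu)\, dx + \mu \\ &= \left[ -\frac{\sigma}{\sqrt{2\pi}} e^{-\frac{(x-\mu)^2}{2\sigma^2}} \right]_{-\infty}^{+\infty} + \mu = (0 - 0) + \mu = \mu \end{align*} \]

Setting \(\mu = 3\) and \(\sigma = 1\) recovers the result for our example.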

3) Draw Samples:

The most general approach for computing an expectation is to use a sampling-based method called the Monte Carlo method. This approach can be used only if we can draw samples from the distribution \(p(x)\). Let’s represent a set of \(L\) samples from \(p(x)\) by \(\{x^{(1)}, \dots, x^{(L)}\}\). Based on the Monte Carlo method, we have:

\[ \mathbb{E}_{p(x)}[x] \approx \frac{1}{L} \sum_{l=1}^{L} x^{(l)}, \]

which is to say that the expected value is approximated by the average of samples drawn from the distribution. For example, let’s draw 10 samples from \(p(x) = \mathcal{N}(x; 3, 1)\) and take their average. Note that this estimate is not exactly equal to 3, the value we obtained with the previous approaches. This is because the Monte Carlo method provides a stochastic estimate of the integral. The estimate is unbiased, meaning that on average it equals the true value. However, it has variance, so any single estimate generally deviates from the true value. We will discuss this in more detail later.
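Here is a minimal Python sketch of the Monte Carlo estimator for \(p(x) = \mathcal{N}(x; 3, 1)\), using the standard library’s `random.Random.gauss`. The samples it draws are not the ones from the example above; the helper `mc_estimate` and the seed are illustrative assumptions. It shows a single 10-sample estimate, a much larger-sample estimate, and the unbiasedness and variance of the 10-sample estimator:

```python
import random
import statistics

MU, SIGMA = 3.0, 1.0  # parameters of p(x) = N(3, 1) used in this section


def mc_estimate(num_samples, rng):
    """Monte Carlo estimate of E_{p(x)}[x]: the average of L samples x^(l) ~ p(x)."""
    samples = [rng.gauss(MU, SIGMA) for _ in range(num_samples)]
    return sum(samples) / num_samples


rng = random.Random(0)  # fixed seed so the run is reproducible

# A single 10-sample estimate: close to 3, but generally not exactly 3.
print(mc_estimate(10, rng))

# More samples give a smaller spread: the estimate's standard deviation is SIGMA / sqrt(L).
print(mc_estimate(100_000, rng))

# Unbiasedness: averaging many independent 10-sample estimates recovers the true mean,
# while their spread reflects the estimator's variance (about SIGMA**2 / 10 = 0.1).
estimates = [mc_estimate(10, rng) for _ in range(20_000)]
print(statistics.fmean(estimates), statistics.variance(estimates))
```

With \(L\) independent samples, the standard deviation of the estimate is \(\sigma/\sqrt{L}\), so using 100 times more samples shrinks the typical error by a factor of 10.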

Summary

In this post, I reviewed some basic properties of expectations and a few simple techniques for computing them. Among these techniques, the Monte Carlo method is widely used in ML, especially when an expectation has no closed-form solution. However, I usually find visualizing functions and distributions very informative, even though it may not lead to a solution directly.

As you may know, I am just starting this blog, and I need your help to reach a wider audience. So, if you like this post, please don’t hesitate to share it with your network. If you have any suggestions, please post them in the comments section.
